Looking for cracks in the standard cosmological model

New computer simulations follow the formation of galaxies and their surrounding cosmic structure with unprecedented statistical precision

Donostia / San Sebastian (Basque Country). 18 July 2023. An international team of astrophysicists, including researchers at the Donostia International Physics Center in Spain, has presented a project that simulates the formation of galaxies and cosmic large-scale structure throughout staggeringly large swaths of space. The first results of their “MillenniumTNG” project have just been published in a series of 10 articles in the journal Monthly Notices of the Royal Astronomical Society. The new calculations will help subject the standard cosmological model to precision tests that could elucidate fundamental aspects of the Universe.

Over the past decades, cosmologists have gotten used to a perplexing conjecture: the Universe’s matter content is dominated by a strange “dark matter”, and its accelerated expansion is caused by an even stranger “dark energy” that acts as a kind of anti-gravity. Ordinary visible matter, which is the source material for planets, stars, and galaxies like our own Milky Way, makes up less than 5% of the cosmic mix. This seemingly odd cosmological model is known as ΛCDM.

ΛCDM can explain a large number of cosmological observations, ranging from the cosmic microwave background radiation (the residual heat left behind by the hot Big Bang) to the “cosmic web” arrangement of galaxies along an intricate network of filaments. However, the physical nature of the two main ΛCDM ingredients, dark matter and dark energy, is still not understood. This mystery has prompted astrophysicists to search for places where the predictions of ΛCDM might fail. Searching for such “cracks”, though, requires extremely sensitive new observational data as well as precise predictions of what the ΛCDM model actually implies.

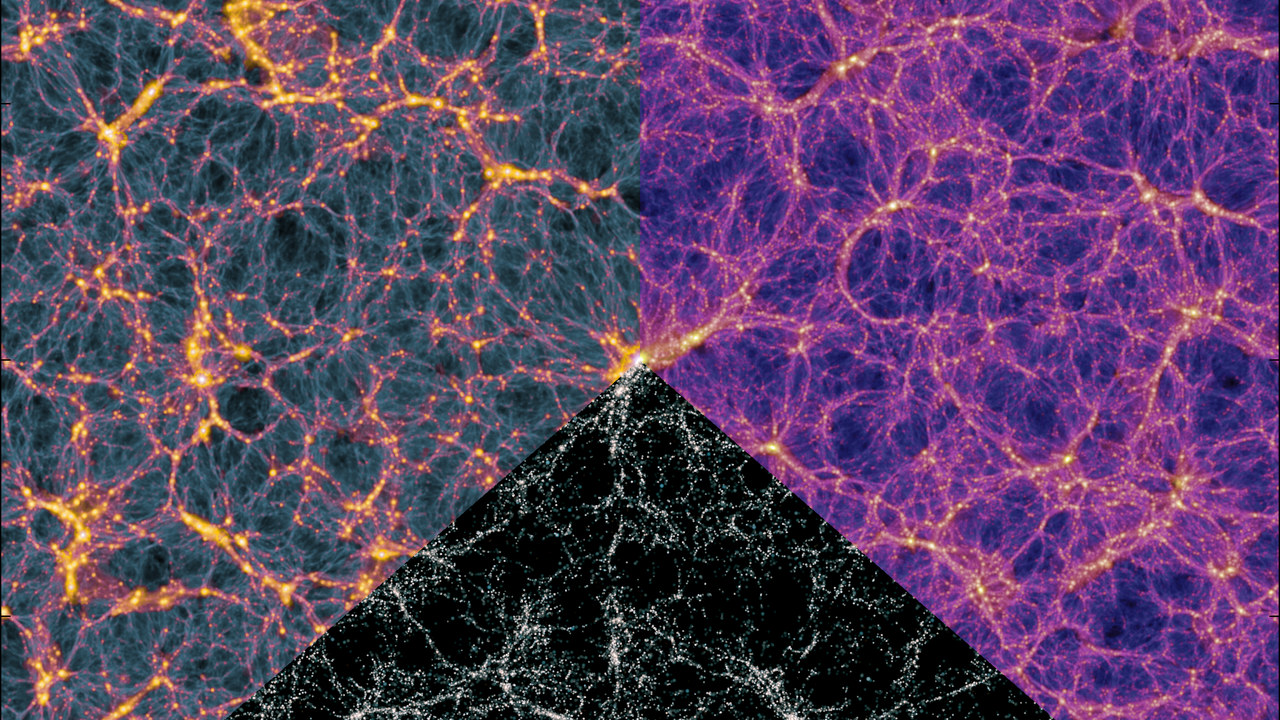

This week, an international team of researchers from the Donostia International Physics Center in Spain, together with the Max Planck Institute for Astrophysics, Harvard University, Durham University, and York University, has taken a decisive step forward in the challenge of understanding the ΛCDM model. They developed the most comprehensive suite of cosmological simulations to date. For this “MillenniumTNG” project, they used specialized software on two extremely powerful supercomputers (Cosma8 in the UK and SuperMUC-NG in Germany), where 300,000 computer cores tracked the formation of about one hundred million galaxies in a region of the universe around 2300 million light years across (see Figure 1).

MillenniumTNG simulates the processes of galaxy formation directly in volumes so large that they can be considered representative of the Universe as a whole. These simulations allow a precise assessment of how astrophysical processes such as supernova explosions and supermassive black holes affect the distribution of cosmic matter. The calculations also directly include massive neutrinos, extremely light fundamental particles that make up at most 2% of the mass in the Universe. Although their share is very small, data from cosmological surveys, such as those of the recently launched Euclid satellite of the European Space Agency, will soon reach a precision that could allow the first detection of neutrinos in the cosmos.

The first results of the MillenniumTNG project offer a wealth of new theoretical predictions that reinforce the importance of computer simulations in modern cosmology. The team has written and submitted ten introductory scientific papers for the project, which have just appeared simultaneously in the journal MNRAS.

One of the studies, led by the Donostia International Physics Center, focused on creating tools that quickly generate millions of virtual universes under different assumptions about the cosmological model. “The MillenniumTNG project has allowed us to develop new ways to learn about the relationship between dark matter and galaxies, and how we can use it to find cracks in the ΛCDM model”, says Dr. Sergio Contreras, first author of this MTNG study, who analysed the data using supercomputers at the DIPC Supercomputation Center. Using machine-learning algorithms, the team was able to predict how the universe would look if neutrinos had different masses or if the law of gravity were different from the one proposed by Einstein. “In the last years we have seen a revolution in how we build computer models, and it is exciting to see how many new doors they have opened to understand fundamental aspects of the cosmos”, says Prof. Raul Angulo, a researcher at DIPC.

Other studies of the MTNG project examine how the shapes of galaxies are determined by the large-scale distribution of matter, make predictions for the population of very massive galaxies in the young universe recently discovered with the James Webb Space Telescope, and construct virtual universes that contain more than 1 billion galaxies.

The flurry of first results from the MillenniumTNG simulations makes it clear that they will be of great help in designing better strategies for analysing upcoming cosmological data. The team’s principal investigator, MPA director Prof. Volker Springel, argues that “MillenniumTNG combines recent advances in galaxy formation simulations with the field of cosmic large-scale structure, allowing an improved theoretical modelling of the connection of galaxies to the dark matter backbone of the Universe. This may well prove instrumental for allowing progress on key questions in cosmology, such as how the mass of the neutrinos can be best constrained with large-scale structure data”. The main hydrodynamic simulation took approximately 170 million CPU hours, equivalent to running a quad-core computer for nearly 5000 years, and the entire project produced more than 2 petabytes of simulation data, a rich asset for further research that will keep the participating scientists busy for many years to come.
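The “nearly 5000 years” comparison follows from simple arithmetic. A minimal check, assuming all four cores of the hypothetical quad-core machine run continuously at full load and a year of 365.25 days:

```python
# Convert the reported CPU-hour budget into wall-clock years on a quad-core machine.
# Assumptions (not from the source): all cores busy 100% of the time, 365.25-day year.
CPU_HOURS = 170e6               # approximate CPU hours of the main hydrodynamic run
CORES = 4                       # a quad-core computer
HOURS_PER_YEAR = 365.25 * 24    # 8766 hours per year


def years_on_machine(cpu_hours: float, cores: int) -> float:
    """Wall-clock years needed if every core runs continuously."""
    return cpu_hours / cores / HOURS_PER_YEAR


years = years_on_machine(CPU_HOURS, CORES)
print(f"{years:.0f} years")  # about 4848 years
```

Dividing by the number of cores gives wall-clock hours, and dividing by the hours in a year gives roughly 4850 years, consistent with “nearly 5000 years”.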